My Homelab for 2024

This is more of a living document than a blog post and I'll keep editing and adding to it as things change.

I thought about doing some version control, but hey, that's what git is for so here's the link.

The Physical

Nodes

| Components | Spec |
|---|---|
| 1x i7-9700 & 3x i7-8700 | |
| 93 GiB | |
| 4x 256 SSD | |
| 4x Gigabit Intel NIC | |
| 4x 10Gigabit ConnectX3 | |
| pve/8.1.3/b46aac3b42da5d15 | |

Zeus

(Why a plant in the case? It wanted root access)


| Components | Spec |
|---|---|
| 1x i7-6700K | |
| 32 GiB | |
| 4x Seagate BarraCuda 4 TB Drive (RAIDZ1) | |
| 2x 2 TB random Drives (Pool 2 - RAIDZ1) | |
| NVIDIA RTX 3050 (Patched drivers for transcoding) | |
| 1x Gigabit Intel NIC | |
| 1x 10Gigabit ConnectX3 | |
| pve/8.1.3/b46aac3b42da5d15 | |

I run the 4 Dell Optiplexes as Proxmox nodes only. I bought them used for a really good deal.

The original lab was mainly just Zeus, but now he's the granddaddy of all my servers. He's been through more disasters than a Greek tragedy. Still, he acts as an extra node in the Prox cluster, serves as my NAS, and runs one of the K8s masters (mainly for graphics-related processes). I used to run TrueNAS SCALE for the NAS but moved to just a Debian instance running ZFS, as I didn't need most of the things TrueNAS provided.
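For a rough idea of what the two RAIDZ1 pools on Zeus give me, here's a back-of-the-envelope sketch. It assumes each pool is a single RAIDZ1 vdev and ignores ZFS metadata, padding and the TB vs TiB difference, so real numbers will come out lower. The function name is just something I made up for illustration.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int = 1) -> float:
    """Rough usable capacity of one RAIDZ vdev, ignoring ZFS overhead.

    One disk's worth of space per parity level goes to redundancy.
    """
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity drives")
    return (disks - parity) * disk_tb


# Disk counts and sizes taken from the Zeus table above.
main_pool = raidz_usable_tb(disks=4, disk_tb=4)  # 4x 4 TB, RAIDZ1
pool_2 = raidz_usable_tb(disks=2, disk_tb=2)     # 2x 2 TB, RAIDZ1

print(f"main pool: ~{main_pool:g} TB usable, pool 2: ~{pool_2:g} TB usable")
```

Either pool survives exactly one failed drive, which is the whole point of RAIDZ1.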

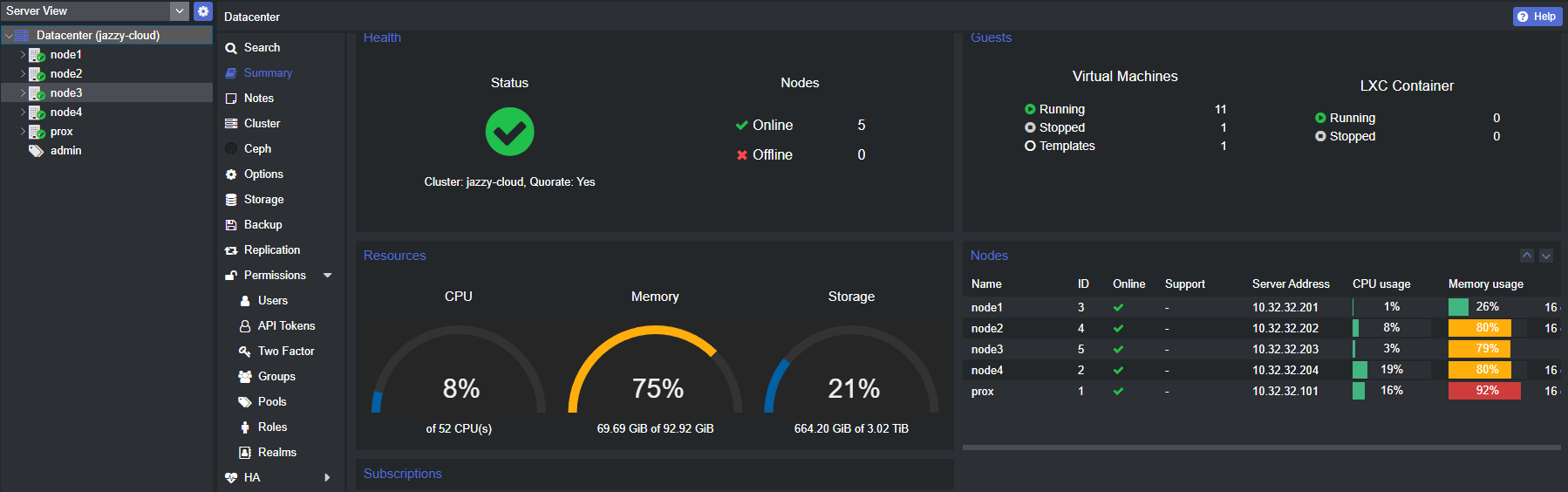

Thus it's a 5-node cluster, with a local LVM-thin pool on each host. I'm experimenting with Ceph and HA but don't have enough OSDs for it to be production-ready yet. Availability is mainly L7-based, but there are a few VMs in Prox HA.
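Why five nodes is a comfortable number: the cluster needs a majority of votes to stay quorate. A minimal sketch of that arithmetic, assuming every node has exactly one vote (I haven't shown my actual corosync config here, so treat that as an assumption):

```python
def quorum(votes: int) -> int:
    """Smallest number of votes that is a strict majority."""
    return votes // 2 + 1


def tolerated_failures(votes: int) -> int:
    """How many single-vote nodes can be down while a majority remains."""
    return votes - quorum(votes)


for nodes in (3, 5):
    print(
        f"{nodes} nodes: quorum is {quorum(nodes)}, "
        f"can lose {tolerated_failures(nodes)}"
    )
```

With five single-vote nodes, quorum is three, so two nodes can be offline for maintenance or disasters before the cluster stops accepting changes.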

I run Debian wherever possible and also use it as my daily driver for my PC and laptop.

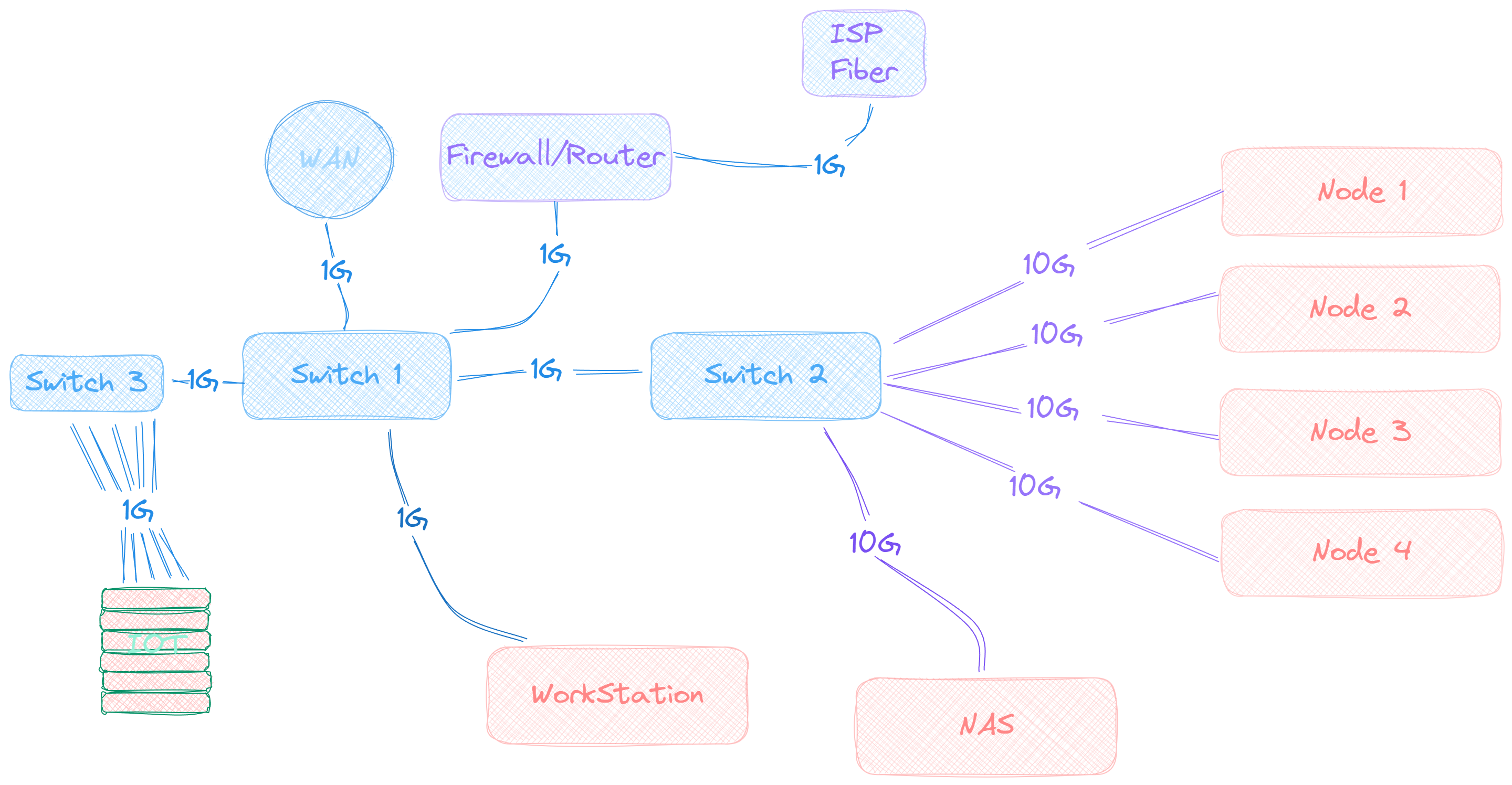

I use two separate bridges for networking. One uses the gigabit cards and carries all VMs, LXCs (these are being moved to K8s), the WebUI, and corosync. The 10G cards are on a separate bridge, no LACP, which is reserved for the Kubernetes cluster and Ceph/storage bandwidth. I set up spanning tree on the MikroTiks so I can lose one cable and still reach every node.

Networking

Router/Firewall

I have a gigabit fiber uplink to my ISP going into my main firewall, a Protectli Vault FW4B running pfSense in HA. I run the secondary instance on Zeus, and even though pfsync is enabled, the WAN switch currently only terminates at the Protectli. Thus, I don't route any traffic through Zeus at the moment, but if needed I could manually move the WAN link over.

By default everything is rejected both ways. I only open ports to the MetalLB IPs for my Istio Gateways, and I control ingress/egress through them for all hosted apps. I also run Snort and have done a lot of finagling to get it working nicely, though it's never perfect. There are also three VPNs running: a WireGuard for personal use, an OpenVPN server set up because a specific type of network traffic only allows TCP streams, which WireGuard doesn't support, and lastly a WireGuard for work and only work traffic. My goal in the future is to get another fully dedicated firewall box for proper HA.
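The "reject everything, then allow only the gateway IPs" idea boils down to an allowlist with a default deny. Here's a toy model of it, not my actual pfSense ruleset: the `Rule` class, the `is_allowed` helper, and the addresses (taken from the 192.0.2.0/24 documentation range) are all made up for illustration.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    dest: str   # destination as a CIDR, e.g. a single load balancer IP
    port: int
    proto: str  # "tcp" or "udp"


# Hypothetical allowlist: two gateway IPs, HTTPS only.
ALLOW = [
    Rule("192.0.2.10/32", 443, "tcp"),
    Rule("192.0.2.11/32", 443, "tcp"),
]


def is_allowed(dest_ip: str, port: int, proto: str, rules=ALLOW) -> bool:
    """Default deny: traffic passes only if some allow rule matches it."""
    addr = ip_address(dest_ip)
    return any(
        addr in ip_network(rule.dest)
        and port == rule.port
        and proto == rule.proto
        for rule in rules
    )


print(is_allowed("192.0.2.10", 443, "tcp"))  # matches the first rule
print(is_allowed("192.0.2.10", 22, "tcp"))   # no rule for SSH, so rejected
print(is_allowed("192.0.2.50", 443, "tcp"))  # not a gateway IP, so rejected
```

Keeping the allowlist this small is what makes it practical to push all ingress/egress decisions down to the gateways.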

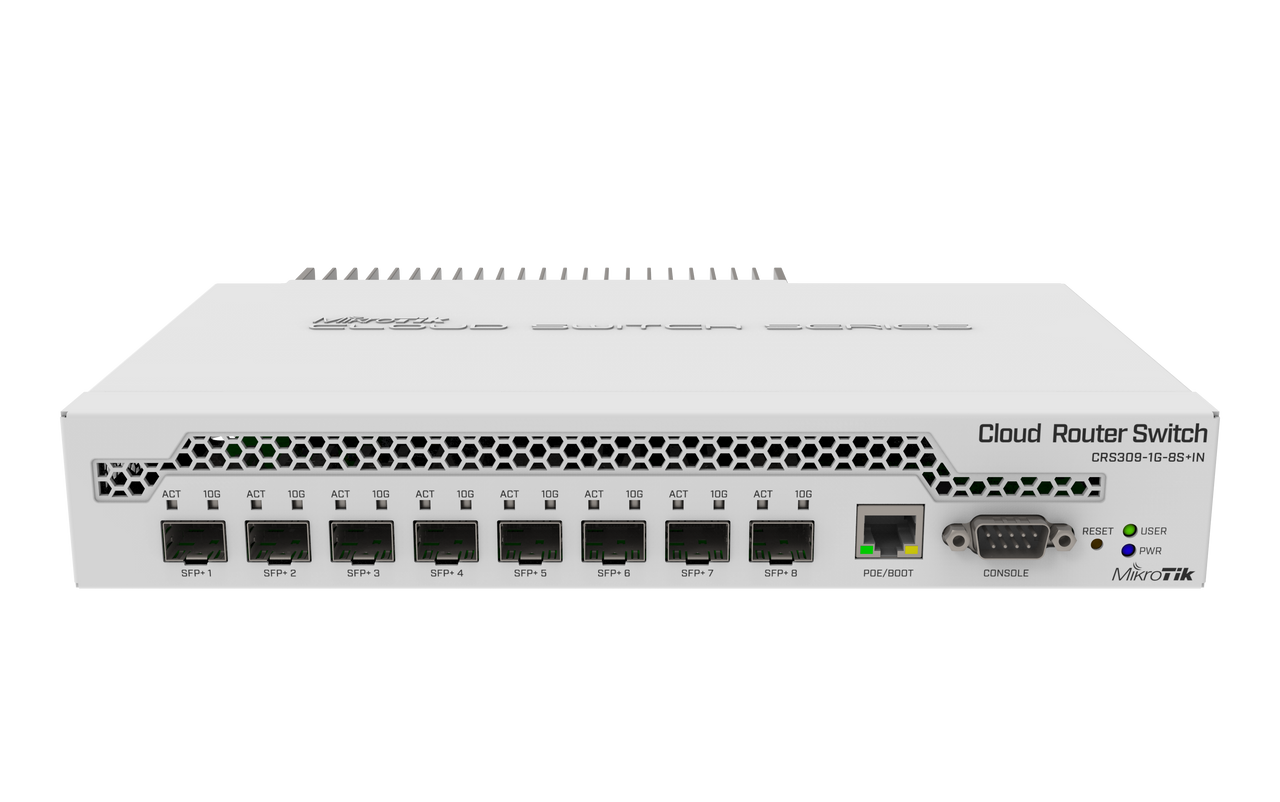

SW1 Core switch : MikroTik CRS309-1G-8S+IN

Nothing fancy, just running SwitchOS.

SW2: W.I.P. about to be CRS326-24G-2S+RM

coming very soon...

AP

Only one access point with 3 SSIDs: LAN, GUEST and IOT.

VLANS

- LAN : Most trusted things are in here.

- IOT : Smart TV, smart light bulbs, and anything I do not trust.

- GUEST : This one is for the GUEST Wifi and only provides WAN access.

- LARGE : PVE, PBS, the ZFS NAS and other storage are here.

- ADMIN : Switches, AP, PBS & PVE WebUI, SSH, secure VPN traffic, etc.

- CLUSTER : Corosync

Base services

DNS...

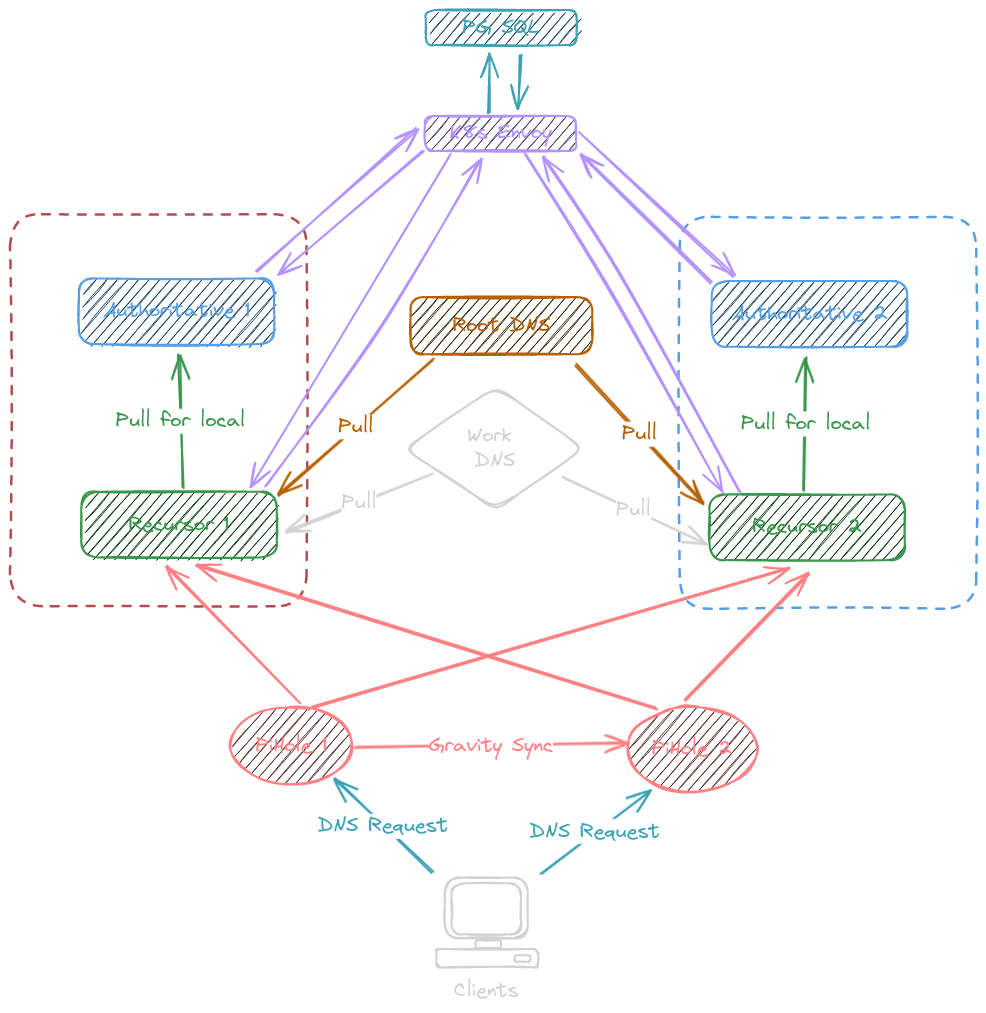

I use two Pi-holes for DNS filtering / blacklisting, two PowerDNS Recursors and two PowerDNS Authoritative Servers connected to my PostgreSQL DB in the K8s cluster.

Kea DHCP on my pfSense router pushes the two Pi-holes to my clients, while servers use the recursors directly.

The PowerDNS solution is pretty overkill for my needs, but it was a good learning experience, and if one of my VMs is ever down, at least my DNS stays running :). It also lets me mess around a lot with routing and gather a good bit of metrics! I've also been using PowerDNS Admin as a nice way to manage the records.
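The reason for running everything in pairs is simple failover: if the first resolver is down, the client just asks the second one. Here's a tiny model of that behaviour with fake resolvers, so it runs without touching the network. The hostname, the address, and the function names are hypothetical, and real stub resolvers have their own timeout and retry rules that this ignores.

```python
from typing import Callable, Optional, Sequence

# A resolver takes a hostname and returns an address, or None if unknown.
Resolver = Callable[[str], Optional[str]]


def resolve_with_failover(name: str, resolvers: Sequence[Resolver]) -> Optional[str]:
    """Ask each resolver in order; skip the ones that are unreachable."""
    for resolver in resolvers:
        try:
            return resolver(name)
        except OSError:
            continue  # this resolver is down, try the next one
    raise RuntimeError("all resolvers are down")


def resolver_down(name: str) -> Optional[str]:
    # Stands in for a resolver whose VM is offline.
    raise ConnectionRefusedError("resolver VM is down")


def resolver_up(name: str) -> Optional[str]:
    # Stands in for a healthy resolver with one made-up record.
    records = {"git.example.internal": "192.0.2.20"}
    return records.get(name)


print(resolve_with_failover("git.example.internal", [resolver_down, resolver_up]))
```

As long as one of the two instances in each tier is alive, lookups keep working, which is exactly the property I was after.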

Git + Ops

Forgejo, Jenkins, and Harbor! All running in the K8s cluster. I'm slowly moving my projects to being hosted on Forgejo and just mirrored to GitHub.

Kubernetes

Set up through some simple Ansible playbooks. I didn't do this the first two times I nuked the cluster, but reinstalling all the packages and configuring the mounts, users, etc. really became a pain.

So it's a 3-master (etcd on the masters), 2-worker cluster. Everything in the cluster is configured with FluxCD. I previously used Rancher for easier introspection but wasn't a fan, especially while learning. I've gone back to the CLI and occasionally Lens. Initially I used Rancher + Helm charts for deployments, but as complexity grew, I quickly learned why GitOps is nice.

This is still a W.I.P... I'll have a detailed write-up about everything running in the future, but here's a simple overview of what's hosted right now.

- MetalLB

- Istio

- NFS Provisioner

- Longhorn

- Cloud Native Postgres (2x)

- KeyDB

- Kafka

- MinIO

- NATS (in testing, hopefully soon to replace the above)

- Prometheus

- Grafana

- Loki

- Promtail

- Keycloak (debating moving to LLDAP rust btw)

- HashiCorp Vault

- *Arrs stack + Qbt + Plex (I use my own operator based on this wonderful project. I might make it public soon, as it can use Postgres or the chart configs for Qbt)

- Forgejo

- Harbor

- Airflow

- Flyte (highly recommend)

- Jenkins (W.I.P.)

- Then all of my personal web services (this site, APIs, scrapers, etc. I'll also go into these more in the future)